In this project, we developed a tool which can help detect failures in a virtualized environment. Besides detecting failures, it could also provide useful information regarding, for example, whether certain resiliency policy worked as it was desired to. Thus, it can help the corresponding engineer to better prioritize their time (for example, after some failure, the resiliency policy worked perfectly (i.e., no impact), then the corresponding engineer might not need to fix the bugs that caused the failure immediately, if he/she happened to have other tasks at hand.)

QPS is an “add-on” scheduling algorithm for input-queued crossbar switches. It builds upon “simple” Queue-Proportional Sampling, and achieves $O(1)$ time complexity per port. It can boost the performance (throughput or delay or both) of a large amount of existing switching scheduling algorithms (e.g., iSLIP, SERENA).

ForestStream is a novel measurement algorithm suite which combined streaming algorithm techniques and graph sampling techniques. Unlike traditional graph sampling techniques, ForestStream can unbiasedly sampling certain portion of trees from a graph forest, thus is able to achieve much higher accuracy than traditional graph sampling techniques.

In this post, we will deal with the following XOR problem. Given $l$ consecutive nonnegative integers which start from $s$, i.e., $s, s+1, \cdots, s + l - 1$, you are asked to get the XOR of them, i.e., $XOR_{x\in [s+l-1]\setminus [s-1]}x$, where $[s]={1,2,\cdots,s}$. An algorithm with complexity $O(l\log (s + l))$ is straightforward: XOR these numbers one by one (see the following pseudo-code).

def XOR(s, l):

x = 0

for s in xrange(s, s + l):

x ^= s # note, for general case, you have to implement your own XOR for long-bit integers

return x

Logarithmic Solution

Now, let’s see a logarithmic solution, i.e., an algorithm with complexity $O(\log (s + l))$.

As we know, XOR of multiple single-bit variable (i.e., $0$ or $1$) equals $1$ if the number of $1$’s is odd, otherwise $0$. Now, we decompose the XOR of $[s+l-1]\setminus [s-1]$ by bits, for the $i^{th}$ bit, we only need count how many 1’s. It appears that we still need $O(l)$ time to do the counting. However, the consecutiveness of these numbers make the appearance of $1$’s and $0$’s periodic. For example, for the $3^{rd}$ bit (from the lowest bit), roughly speaking the pattern is as follows.

\[\cdots,0,0,0,0,1,1,1,1,0,0,0,0,1,1,1,1,\cdots\]Of course, you need pay a little attention to the beginning and ending parts, since $l$ might not divide $2^3$.

Generally,

- for the $k^{th}$ ($k>1$) bit you only need to count how many $1$’s are they in the first and last partial period (if applicable). Since there are always even number of $1$’s in a full period.

- for the first bit, clearly $1$’s appear $\lfloor\frac{l - b}{2}\rfloor + b$ times

The following pseudo-code shows how to XOR the $i^{th}$ bit. Clearly, it can be done in $O(\log(s + l))$, as there are at most $\log (s + l)$ bits, therefore, this algorithm can calculate the XOR in $O(\log^2(s+l))$ time. Note that, before calculating the XOR bit-by-bit, you should first get these bits and after finishing the XOR, you should assemble these bits into a decimal number. Clearly, these two operations can be done in $O(\log(s+l))$.

# base = 2 ** i

# bit is the value of the i-th bit

# r[i] is the i-th bit for the result

if base == 1:

r[i] = ((l - bit) / 2 + bit) % 2

else:

period = 2 * base

preamble_len = period - bit * base - s % base

preamble = min(l, preamble_len)

if bit == 0:

preamble = max(0, preamble - (base - s % base))

padding = 0

if l > preamble_len:

padding_len = (l - preamble_len) % period

padding = padding_len - base if padding_len > base else 0

r[i] = (preamble + padding) % 2

Fastpass is a Datacenter network framework proposed by Jonathan Perry, et al.. Its authors published its codes, however, I encountered some issues while installing it on Ubuntu 16.04. Here is Long’s vagrant VM for building Fastpass.

-

Issue 1: gcc version issue

-

error:

include/linux/compiler-gcc.h:86:30: fatal error: linux/compiler-gcc5.h: No such file or directory - cause: the kernel version of my Ubuntu (i.e., Ubuntu 16.04) is higher than the kernel version used by fastpass (i.e., v3.10.25).

-

solution: before

make -j22addingecho "#include <linux/compiler-gcc4.h>" > include/linux/compiler-gcc5.h - source: compile-a-linux-2-6-kernel-module-with-newer-compiler

-

-

Issue 2: Berkeley DB issue

-

error:

E: Unable to locate package libdb6.0-dev E: Couldn't find any package by glob 'libdb6.0-dev' E: Couldn't find any package by regex 'libdb6.0-dev' - cause: it seems there is no package

libdb6.0-devfor Ubuntu 16.04 (I only foundlibdb6.0-devfor Ubuntu 14.04) - solution: replace

libdb6.0-devwithlibdb-dev(if you still see the similar error, then you might need add the source to your/etc/apt/source/lsit)

-

As one of the most popular modern version control tools, git is getting more and more popular. As a result, Github becomes a necessary part of programmers’ daily life. In this post, we will introduce some tricks to help programmers play with Github. These tricks are extremely useful, when you are dealing with multiple Github accounts (e.g., one for company codes, one for personal codes).

Avoid Password with SSH: Single Account Case

The first trick is to avoid entering password while pushing local repositories to remote or pulling/fetching private remote repositories.

Generating SSH Key

$ ssh-keygen -t rsa -b 4096

Generally, we will see outputs as follows.

Generating public/private rsa key pair.

Enter file in which to save the key (/home/mininet/.ssh/id_rsa): git_key_example

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in git_key_example.

Your public key has been saved in git_key_example.pub.

The key fingerprint is:

38:44:30:a8:a4:6f:eb:c1:66:12:bb:28:dd:bd:7c:29 mininet@mininet-vm

The key's randomart image is:

+--[ RSA 4096]----+

| .o.. |

| .. o |

|o. . |

|o . . |

|.. o S |

| +o . |

|oo=o . . |

|o=o...E o |

|+.. o+ |

+-----------------+

During the above process, you will be asked to

- enter the file name for your key pair (In the above example, we used

git_key_exampleas the file name.). If you only enter the file name, the key pair would be stored in current directory. If you want to store your key in somewhere else, you can add the full path of your file. - Enter a passphrase (If you use SSH out of security, then we strongly suggest you enter one. However, if you choose SSH just because you do not want to enter the password, then you are suggested to leave it empty. Because otherwise you will be asked to enter the same passphrase whenever you

git push.).

After the above process, you will find the key pair in the directory you enter. In the above example (assume current directory is /home/mininet/.ssh/), you will find the following two new files:

- git_key_example

- git_key_example.pub The first one is the private key and the second is the public key.

Upload to Github

-

Prepare (get the content of public key):

$ cat git_key_example.pub ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDEKiWm5RBjQJOsNmGi6GQNAqhoovXA/qVm1i+nyZ0j18kQkCaeTSdGDUs9UiV9ts7vG4MLHucRLI4dh5hvOH3gr0W7MhB8JubOptKJWtjGaw4f1Vb3xxdKGJ7igHA/ud2N5omkJIptyKXrvybLkqnkLcGgxttvqheOGczzC2g0MIa7MxqhlALkdj0QVyjrtbqltB+k0+lAqyeLRJGrWqmRZ8FlPn1KslpWKq+LFAuKNg24YF7HxbGubdVVeGsKTOowrDBN5IGdEJvlqwZsVUvGnx1oznJzpfdw80KCcm1vFmMU6E3oo3OLfgjhRvcfl5DV+OznTPPr3KbybNSAUIr5lR00LkBhKH0nxiHcERi9/E6/95/fVovY8vV38wPLXBCq/jkmQmtUFHQ86C/1f0bgAAano56Ovd2p7ur5x2pe+sCUxwcQQ3kYOpesqQhCvpkIA6kDGznsGiY1418sCj8D7na1MoifvzsPfpAbO8zAO0YtITEfaaiBum9i4GbzMTl4AzuLIff03XhPRHlew/9McG6DlKm04A96QmRbUZQHzdUyivOSpG7HCFBrYmiEga1EPV8WRRU8SJu0hftV0Upk2T46KASygvo5A0wZ6YUWytHhY8Sdtrx56y4mOtol3RkQtR/vUGCjxIbtl3Lw1J3xoxQkxynesUM8bopBgkwxiQ== mininet@mininet-vm -

Open the browser and go to

github.com, thenLog in

Find “SSH and GPG keys” in “Personal settings”

“New SSH key”

Paste the content of your public key.

Add Your SSH Key to ssh-agent

$ eval "$(ssh-agent -s)"

$ ssh-add /path/to/your_ssh_key

To avoid to enter the above commands every time after you restart your computer, you can add them in your bash configuration file (e.g., ~/.bashrc for Ubuntu, ~/.bash_profile for OS X).

Multiple Accounts Case

Assume you have two github account, for ease of presentation, we refer to them as A and B.

- Configure SSH keys for A and B, respectively by the above approach

-

Edit

~/.ssh/configand add the following sentences at the end of the file:Host github_A HostName github.com User git IdentityFile path/to/ssh-private-key-for-A Host github_B HostName github.com User git IdentityFile path/to/ssh-private-key-for-B -

Change

github.comto the corresponding host name you configured above. Suppose the remote repository associated with account A isgit@github.com:A/example.git:$ git remote add remote_A git@github_A:xlong88/publications.git $ ... $ git push remote_A masterSimilar for B.

PDF has become one of the most popular file type used on our electronic devices. However, the management of PDF files is not an easy task, especially when you have too many PDF files. For example, as a undergraduate/graduate student, you might obtain bunch of lectures in PDF format for a certain course (say Calulus). After you have taken this course for a long while, we encountered some concepts in Calculus, but you did not remember the details behind them. However, you are $100%$ sure that they must be in certain lectures (but you are not sure which lectures they locate in). At this time, you might want a tool to combining these lectures into a single file such that you can easily locate them.

In this post, we introduce a simple tool that can provide you a user-friendly GUI to combine multiple PDF files into a single one.

Dependencies

- PyPDF2

- Tkinter

Remarks: In the future, to support drag and drop, we might switch to Java.

Usage

- Clone from Github:

git clone -b gui https://github.com/lgong30/pdfMerge - Go to the repository:

cd pdfMerge - Install dependencies:

pip install -r requirements.txt(Note that, if you get permission denied errors, please add asudobeforepip) - Give the python script the permission to execute:

sudo chmod +x window.py - Run:

./window.py

TODO

- Add per-file bookmarks while merging

- Improve the “look” of this application

- Add the support for drag-and-drop

If ever played with GIT, then you might encountered a tedious situation, where you need create a .gitignore file for every repository even if many of your repositories share the same .gitignore file. Some of you might have stored these .gitignore files for various projects, however, the manual copy and paste are still tedious. Are there any tools that can help us “play against” .gitignore easier? In this post, we will introduce you one of such tools.

Requirements

Install & Usage

Please refer to the corresponding “official site” of Sublime Text or Sublime-Gitignore.

Customization

Of course, the template provided in Sublime-Gitignore might not satisfy your requirements. Then, you can customize the templates. Before that, you need another sublime text plugin (at least for sublime text 3), that is PackageResourceViewer.

- First, you need extract the package

Sublime-Gitignore(HOWTO: please refer to the “official site” of PackageResourceViewer) - Second, dive into the directory of all templates in

Sublime-Gitignore(i.e., the sub-directoryboilerplatesunder the root directory of packageSublime-Gitignore) - Third, create or edit the corresponding

.gitignoretemplate

If you are using BasicTex on your Mac, you might get frustrated when you get errors on missing packages, especially when the latex source using too many packages that you did not install on your Mac. Is there any tools that could help you automatically install those missing packages. YES! texliveonfly provides you such capability. However, you might want this feature to be integrated into your text editor such as Sublime Text.

In this post, we help you design a sublime text plugin for supporting texliveonfly.

Usage

Press cmd+shift+f (unfortunately, current version needs you start your sublime text with the privilege of a superuser, in the future, we will try to figure out how to avoid this)

Installation

git clone https://github.com/lgong30/TexliveOnTheFly ~/Library/Application\ Support/Sublime\ Text\ 3/Packages/TexliveOnTheFly

TODO

- Provide customization feature

- Avoid the requirement for super user privilege

Recently, I have learned about the move semantics of C++ 11 from Bo Qian’s YouTuBe Channel. As an “old boy” that is curious to all kinds of new techniques, I definitely would give myself a try.

Define a Class

First of all, I create the following C++ class (which is similar to the one used in Bo Qian’s Video), i.e.,, Note that, all “#include” are omitted.

class exampleA {

int *x;

int size;

public:

// default constructor

exampleA(): x(NULL), size(0) {

std::cout << "Default constructor is called" << std::endl;

}

// constructor from a std::vector

exampleA(const std::vector<int>& vec){

size = vec.size();

x = new int[size];

for (int i = 0;i < size;++ i) x[i] = vec[i];

std::cout << "Create exampleA object from vector" << std::endl;

}

// copy constructor

exampleA(const exampleA& other)

{

std::cout << "Copy constructor is called" << std::endl;

size = other.size;

if (size > 0) {

x = new int[size];

for (int i = 0;i < size;++ i) x[i] = other.x[i];

}else

{

x = NULL;

}

}

// move constructor

exampleA(exampleA&& other)

{

std::cout << "Move constructor is called" << std::endl;

size = other.size;

x = other.x;

other.size = 0;

other.x = NULL;

}

// deconstructor

~exampleA() {

if (x != NULL) delete [] x;

x = NULL;

size = 0;

std::cout << "Deconstructor is called" << std::endl;

}

// friend function: overloading operator <<

friend std::ostream& operator<<(std::ostream& os, const exampleA& a);

};

// definition of (or implementation of) overloading operator <<

std::ostream& operator<<(std::ostream& os, const exampleA& a){

for (int i = 0;i < a.size;++ i) os << a.x[i] << " ";

return os;

}

Define two Functions

Then, I defined a function that returns an exampleA object and another function that uses an object from the class exampleA as a parameter as follows.

// function to create an exampleA object

exampleA createObject(){

exampleA a(std::vector<int>(10, 1));

return a;

}

// function uses an exampleA object as a parameter

void passByValue(exampleA a) {

std::cout << a;

}

“Moment to Witness the Miracle”

Next, I though the moment to witness the miracle (Chinese: 见证奇迹的时刻) was coming. I created the following use cases to verify my understanding.

int main()

{

exampleA a(std::vector<int>(10, 1));

passByValue(a);

std::cout << "======================================\n\n";

passByValue(createObject());

return 0;

}

Before “witnessing the miracle”, let us first do some simple analysis to figure out what the “miracle” is. According to the above use cases, we first create an exampleA object – i.e., a – from an std::vector. Then we passed a to the function passbyValue by value, since a is a lvalue, we would expect the copy constructor to be called. And then, we passed a rvalue createObject()

to the function passbyValue, we would expect the move constructor to be called.

However, the following presents the output from running the above example (by using g++ -std=c++11 example.cpp -o example && ./example)

Create exampleA object from vector

Copy constructor is called

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

======================================

Create exampleA object from vector

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

Deconstructor is called

Unfortunately, we failed to witness the most important part of the miracle, i.e., the move semantics.

Dig Out of The Chief Culprit

After Google, Google, … and Google again, I eventually found “the chief culprit”. It is the copy elision feature of the compiler. Now, it is really the moment to witness the miracle. The following gives the final outputs after disabling the copy elision (add a flag -fno-elide-constructors, that is to run g++ -fno-elide-constructors -std=c++11 example.cpp -o example && ./example).

Create exampleA object from vector

Copy constructor is called

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

======================================

Create exampleA object from vector

Move constructor is called

Deconstructor is called

Move constructor is called

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

Deconstructor is called

Deconstructor is called

From the above outputs, you can see that the move constructor was called twice. First call was during the returning from the function createObject, and the other one is during passing value to the function passByValue.

In this post, we investigate how to efficiently delete certain elements from a Python list. More specifically, you want to delete certain elements, whose indices are given.

Solution A: Move to A New List

def removeA(myList, removalIndices):

if not isinstance(removalIndices, set):

removalIndices = set(removalIndices)

return [v for i, v in enumerate(myList) if not (i in removalIndices)]

This solution moves elements that do not need remove to a new list. Clearly, it would not be quite efficient when the number of removals is much less than the length of the original list. Besides, if the indices of the removals are not stored in a set but a list, the conversion from list to set would further drop the efficiency.

Solution B: Remove From The Last

def removeB(myList, removalIndices):

for removalIndex in removalIndices[::-1]:

del myList[removalIndex]

return myList

This solution removes the elements from the last (the one with the largest index) to the first (the one with the smallest index). This solution assumes that the indices of removals are sorted in an ascending order. Note that, removing from the first one is not a good idea, since each removal will change the indices of the remaining removals. In other words, for removals other than the first one, you have to recalculate the index before removing.

Solution C: Shift Removals Together Then Remove

def removeC(myList, removalIndices):

nextRemovalIndex = removalIndices[0]

indexOfRemovalIndices = 1

numOfRemovals = len(removalIndices)

lenOfList = len(myList)

currentIndex = nextRemovalIndex + 1

while currentIndex < lenOfList:

nextIndex = removalIndices[indexOfRemovalIndices] if indexOfRemovalIndices < numOfRemovals else lenOfList

while currentIndex < nextIndex:

myList[nextRemovalIndex] = myList[currentIndex]

nextRemovalIndex += 1

currentIndex += 1

indexOfRemovalIndices += 1

currentIndex += 1

# pay attention

myList[-numOfRemovals:] = []

return myList

This solution first shifts all the removals to the end of the list, and then removes them. Note that, this solution also assumes the indices of removals are sorted in an ascending order. Compare to Solution B, it guarantees that every element that needs move just moves once.

Which One Will Be More Efficient?

- Dense Cases (

myListhas $10^6$ elements, removing $80\%$)-

Random locations

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 159.12msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 134.69msSolution B: 1.13ms

Solution C: 132.39ms

Remarks: during the measurements, I found that the

setcreate from different status (e.g., sorted or unsorted) of alistwill have different efficiency. I will investigate this in details in future. -

From the beginning

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 187.58msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 133.30sSolution B: 7403.70ms

Solution C: 221.80ms

-

From the ending

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 133.34msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 142.03sSolution B: 0.74ms

Solution C: 121.12ms

-

- Sparse Cases (

myListhas $10^6$ elements, removing $0.2\%$)-

Random locations

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 134.81msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 149.77msSolution B: 216.96ms

Solution C: 159.68ms

-

From the beginning

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 150.39msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 153.45msSolution B: 2098.12ms

Solution C: 182.74ms

-

From the ending

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 131.15msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 134.47sSolution B: 204.69ms

Solution C: 163.19ms

-

TODO

- analyze the above results

- efficiency of set creating from different status of a list

- while loop versus splicing

TiKz provides a very efficient way for us to “program” our figures. However, sometimes you might only want to share your figures but not the source codes of your figures with other for some reasons (e.g., the source code uses a large amount of TiKzlibrary, other people might not have these libraries in the machine or you just want to make your codes private). In this post, we present a way to generate eps figure from TikZ source code (Of course, you can also generate a PDF and let others include that PDF).

LaTeX Code

\documentclass{article}

\usepackage{tikz}

%% put tikzlibrary below if necessary

% set up externalization

\usetikzlibrary{external}

\tikzset{external/system call={latex \tikzexternalcheckshellescape -halt-on-error

-interaction=batchmode -jobname "\image" "\texsource";

dvips -o "\image".ps "\image".dvi;

ps2eps "\image.ps"}}

\tikzexternalize

\begin{document}

%% put your tikz code below or input your tikz code via \input

\end{document}

Usage

latex -shell-escape LATEX_FILE_NAME

Please make sure you are using latex engine, other engines

will not work. Besides, please make sure all required tools

are installed.

SSH is a commonly used remote-access solution. The popularity of various cloud based solutions brings “some challenges” in the usage of SSH to remotely access the servers in the could. Because, you might have a lot of VMs in the cloud, and they might have different IP address/URL, username and passport. This would become a nightmare if you only know the “elementary” SSH commands.

In this post, we will introduce you some “advanced” SSH configurations. For ease of presentation, we assume you have two VMs in the cloud, their identities are as follows:

- A

- domain name: hostA.com

- username: userA

- password: passwdA

- B

- domain name: hostB.com

- username: userB

- password: passwdB

Note that, unless explicitly stated, we assume all the configurations are on your local computer.

Generate SSH key

Please refer to Play with Github

Upload SSH Public Key to VM A/B

Copy the content of your public key and add it to the end of the file ~/.ssh/authorized_keys on VM A (note that if the file does not exists, you need create one.).

Similarly, upload SSH public key to VM B.

Configure Local SSH

Add the following content at the end of your ssh configuration file, i.e., ~/.ssh/config

Host A

HostName hostA.com

User userA

IdentityFile path/to/ssh-private-key

Host B

HostName hostB.com

User userB

IdentityFile path/to/ssh-private-key

Now, you can remotely access VM A simply by ssh A.

More Advanced Tricks To Be Appeared

- Showing GUI

- Showing GUI from a VM behind NAT

- Creating SSH tunnels to “bypass” access gateway

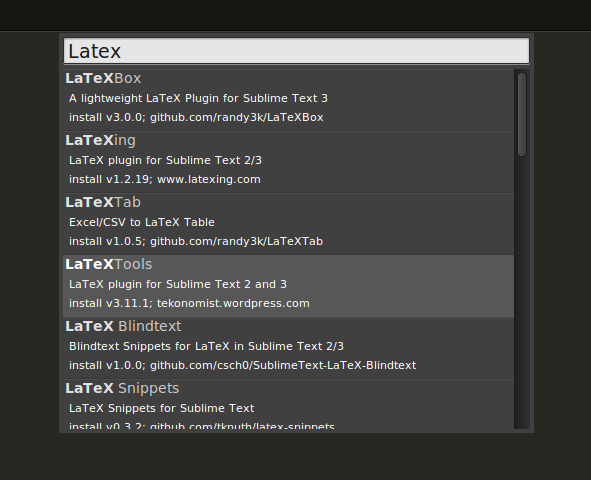

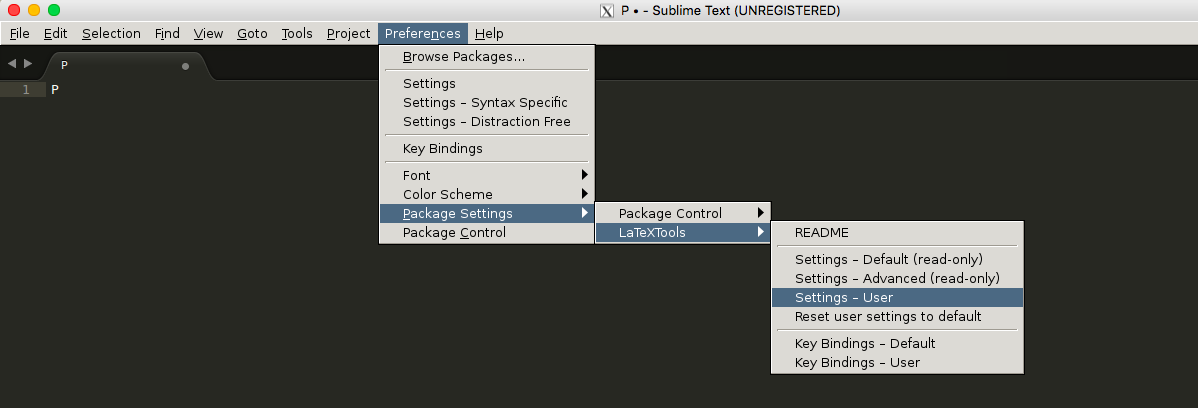

This post details the steps for building and using a “LaTeX IDE” with Sublime Tex 2/3 and LaTeXTools. For ease of presentation, we will call sublime text 3 as ST3 for short, similarly we will use ST2 stands for sublime text 2.

Download and Installation

- Download ST3 for your operating system.

- Install

- Window/Mac: GUI install, just doubly check the file you downloaded, and follow the instructions.

- Ubuntu: here assume you have downloaded the deb installation file, open a terminal and type in the following command

sudo dpkg -i path/to/sublime_text_3_deb_file

Configuration

-

Manually (Semi-manually) install Package Control (Note that, Package Control is the only package that you need install manually. It seems that the latest version of ST3, you can also install this package like other packages.)

ctrl + `(command + `if you use Mac) to open the console, and copy the corresponding codes into the console and then pressenter.

-

Install package LatexTools

ctrl + shift + p(command + shift + pif you use Mac) to open the command Platte for Package Control- Find and click “Package Control: Install Package”

- Enter “LaTexTools” to search and click on the “LaTexTools” to install

-

Configuration (optional only if you want to use the customized build script)

- Open the configuration for LaTeXTools and “OK” for all pop-up windows.

- Replace the content with this one

- Open the configuration for LaTeXTools and “OK” for all pop-up windows.

Remark: To use the above customized configuration, you need the following tools (note that, for the latest version of Ubuntu, all tools are built-in in the OS.)

- Ghostscript

- Windows

- Mac:

brew install ghostscript

- PDF Viewer

- Windows: SumatraPDF

- Mac: Skim

Usage

Please refer to LateXTools for Cross Reference in Multi-file LaTeX Projects

In this post, we will introduce a method to help you easily do cross reference in a multi-file latex project (i.e., a latex project where latex source files are distributed into multiple files). For example, the file structure of a multi-file latex project might look like:

path/to/your-latex-project/

main.tex

introduction.tex

related-work.tex

my-work.tex

evaluation.tex

conclusion.tex

reference.bib

figures/

In the above project, you might cross cite for example an algorithm which you define in the file my-work.tex in the file evaluation.tex. Without the assistance of some useful tools, you might have to check the file my-work.tex to make sure which label you have defined for that algorithm. And in some tools, they might automatically remind you all the labels you have defined in the current file, which means they cannot help you in the cross-file cross reference.

In this post, we will describe you an easy method to help you do the cross-file cross reference by using the LaTeXTools plug-in of Sublime Text. Note that in this post, we will focus on the configuration for cross-file cross reference. If you have no idea on how to install the LaTex Tools or even Sublime Text, please ask Google or some other search engine.

Create a Sublime Text Project

Create a sublime text project for your multi-file latex project. If you have no idea on how to create a project in sublime text 2/3, please refer to understanding-projects-in-sublime-text-saving-switching-etc.

Configure Your Project

After you create your project, you will see a auto-created file (named as your-project-name.sublime-project) in your root path of your multi-file latex project. Open the file, and the put the following configurations into the file.

"settings" : {

"TEXroot": "main.tex",

"tex_file_exts": [".tex"]

}

The above configuration assumes, the root latex file of your project is main.tex (if your project use another tex file, please change it correspondingly.). If you really want to make a full understanding of the above configurations, please refer to Project-Specific Settings of the Latex Tools.

The following is for those who are not so familiar with JSON, i.e., the format for the project setting file. Usually, before you do manual changes on the project setting file, its content looks as follows:

{

"folders":

[

{

"path": "."

}

]

}

After you add the configurations, your setting file would look as follows (please pay attention to the comma after the ], it cannot be omitted):

{

"folders":

[

{

"path": "."

}

],

"settings" : {

"TEXroot": "main.tex",

"tex_file_exts": [".tex"]

}

}

Please pay attention to the comma added after the close square bracket (i.e., ]).

Notice

If you have any suggestions or questions regarding this post, please lease your message in the comment session or send e-mail to mr.gonglong@outlook.com directly.

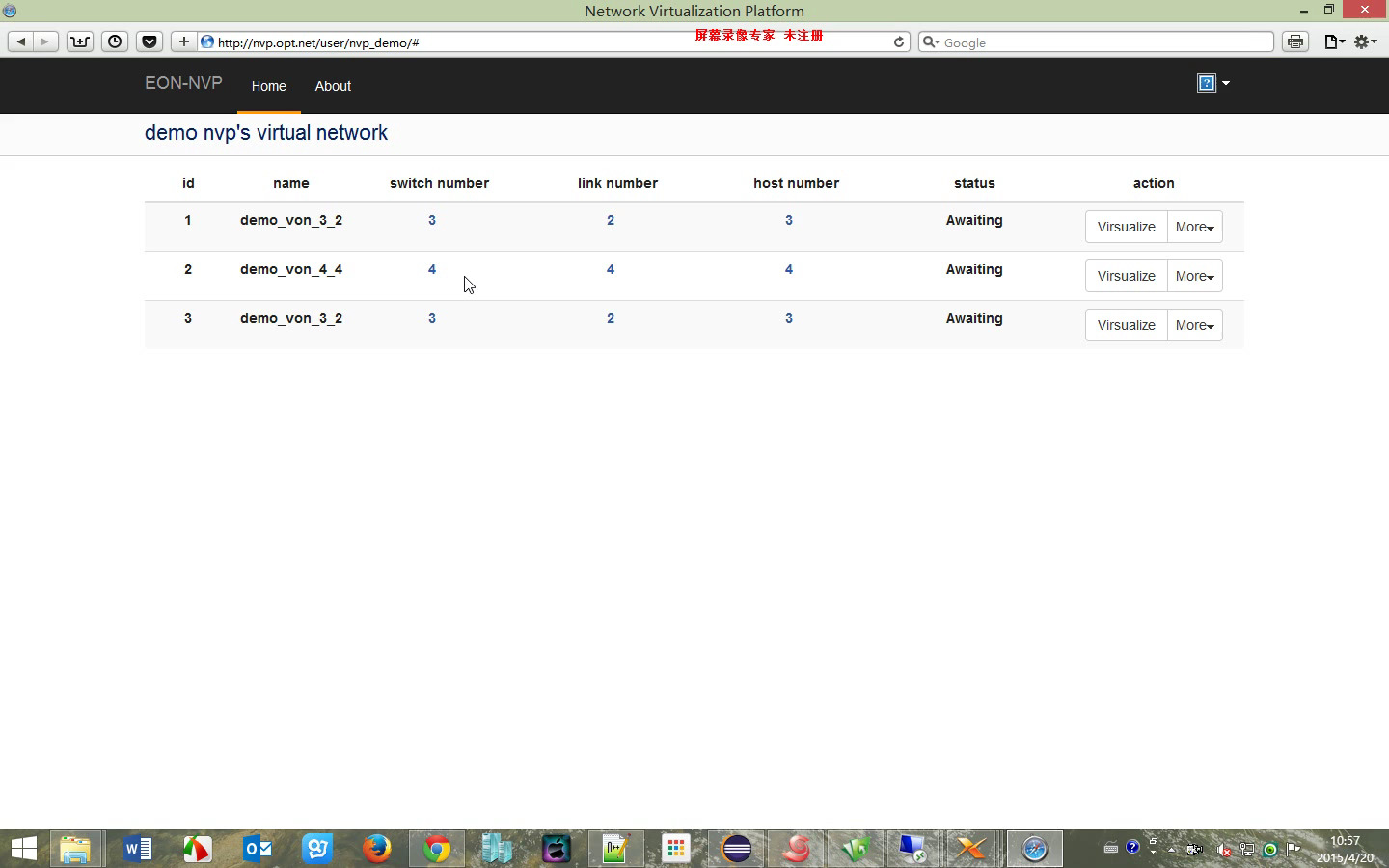

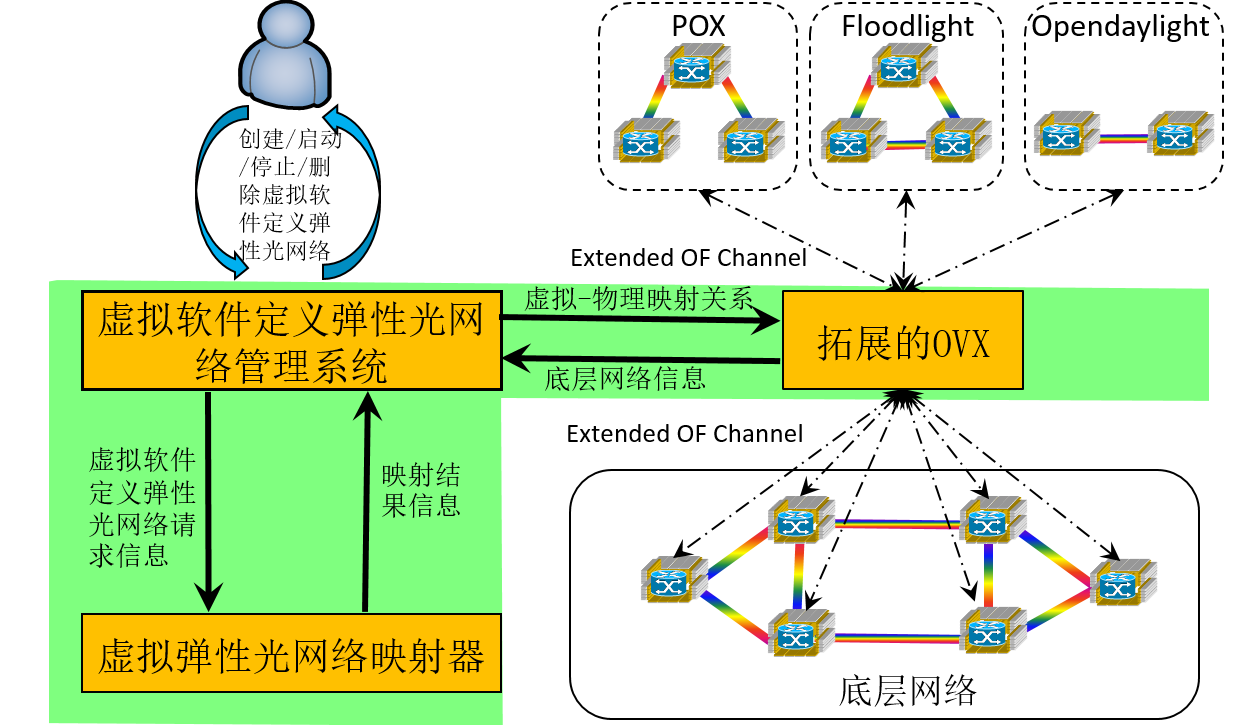

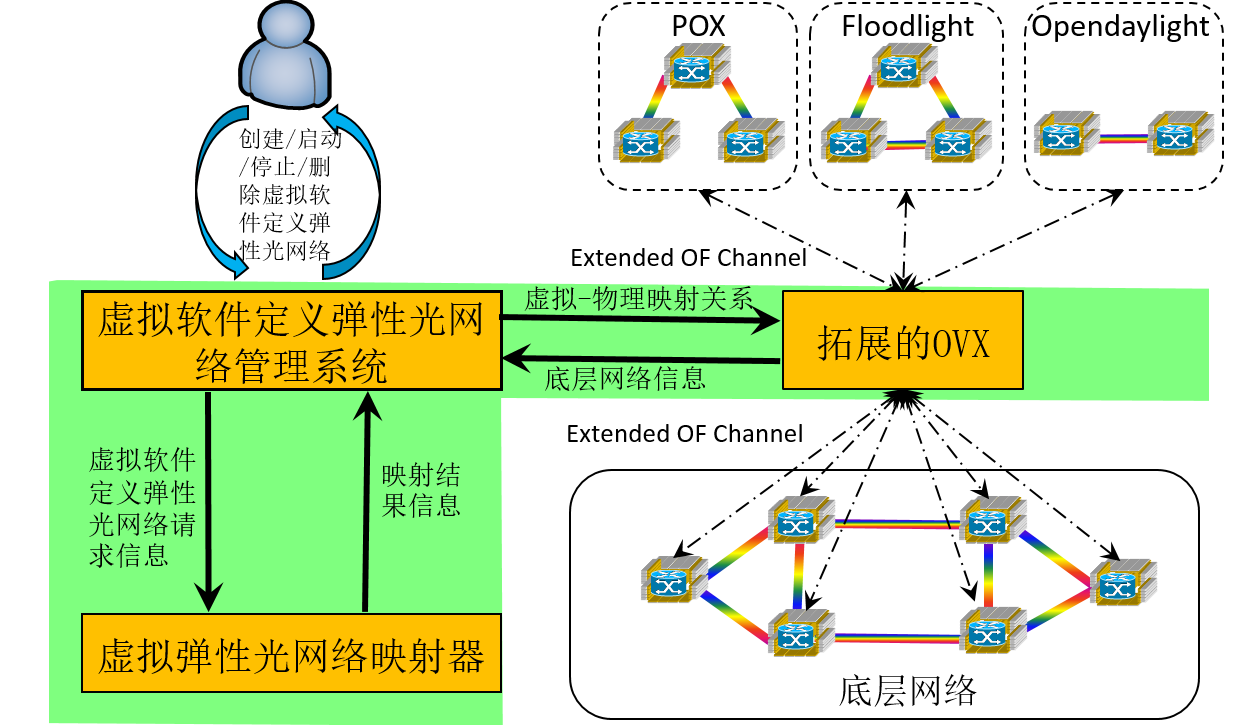

SD-EONVP is a network virtualization platform that provides efficient interfaces for customers to create and manage their virtual networks whose underlying infrastructure is the flexible-grid elastic optical networks. It extends the open-source project OpenVirteX (OVX) in the following three aspects: 1) it supports quantitative bandwidth allocation ( of course only for elastic optical networks) by providing spectral resource virtualization to OVX; 2) it provides high efficient built-in virtual network embedding algorithms; 3) it provides convenient interfaces based on web GUI for customers to create and manage their virtual network.

System Overview

The above figure shows the overview of our network virtualization system. From it, we can see there are three major modules in our system.

-

Virtual Software-Defined Virtual Network Management Module

A Web GUI for users (or customers) to create and manage their virtual networks continently.

More details you can refer to the video demo in the following part and my master thesis

-

Network Hypervisor (Extended OpenVirteX, E-OVX for short)

A openflow-based network hyper-visor supporting for network virtualization

It is based on OpenVirteX, we did protocol extension on it (i.e., making it support quantitative bandwidth allocation for elastic optical network based on the concept of spectral virtualization)

More details you can refer to the official web of OpenVirteX

-

Virtual Network Embedder

The module is to conduct virtual network embedding, which is one of the major challenges in network virtualization. In the platform, we have built in some efficient virtual network embedding algorithms.

Motivations to separating it from Network Hyper-visor

Easily upgrade

Easily debug

Major Principle

-

Topology Virtualization

Based upon LLDP, creating an “illusion” (for the controller of the virtual network) that the topology of the virtual network is the same as what the customer wanted

-

Address Virtualization and Spectral Virtualization

based on OpenFlow, rewriting the addresses and spectral information at the ingress and egress switches to make the controller of the virtual network believe that it “owns” the whole network

More details, you can refer to OpenVirteX official web or my master thesis

A Video Demo

The following video shows how a user applies our network virtualization platform to create and manage their virtual networks.

Model details your can refer to my defense ppt and the system part of my master thesis.

Known Issues

-

Robustness: current version is known to be not very robust

-

Speed: the response time (especially for starting a virtual network) is quite long, as the current VNE module is implemented in MATLAB

Applications

On-Demand and Reliable vSD-EON Provisioning with Correlated Data and Control Plane Embedding

Running time measurement is very important when you want to know the empirical time complexity of your “algorithm” or when you want to provide some progress status of your running program (for example, how long will

your program continue before finish a specific task). In MATLAB, you can use tik tok; in Python, you can use timeit or time package; What about in C++. In this post, we will give a few macros for you to conduct the time

measurement task.

Run Time Measurement Macros for C++

#include <chrono>

#define TIMING

#ifdef TIMING

#define INIT_TIMER auto start = std::chrono::high_resolution_clock::now();

#define START_TIMER start = std::chrono::high_resolution_clock::now();

#define STOP_TIMER(name) std::cout << "RUNTIME of " << name << ": " << \

std::chrono::duration_cast<std::chrono::milliseconds>( \

std::chrono::high_resolution_clock::now()-start \

).count() << " ms " << std::endl;

#else

#define INIT_TIMER

#define START_TIMER

#define STOP_TIMER(name)

#endif

Please make sure that your compiler support C++11, and you do use C++11 or higher to compile your code. For example, if you want to compile your C++ code with C++11 by using g++, you can simply add the following flag: -std=c++11

An Example

The following presents a simple example, which uses the above macros to measure running time (it assumes that the above macros are defined in tm.h).

#include <iostream>

#include "tm.h"

inline int add(int a, int b) {

return a + b;

}

int main()

{

// initialize a timer

INIT_TIMER

int repeat_times = 10000000;

// start the time

START_TIMER

for (int i = 0;i < repeat_times;++ i) {

add(100, 10);

}

// stop the timer and printing out the time information

STOP_TIMER("adding two integers for repeating " + std::to_string(repeat_times) + " times")

return 0;

}

If you run the above code correctly, you should see an output similar like below:

$ RUNTIME of adding two integers for repeating 10000000 times: 19 ms

Git is becoming one of the most popular version control tools. In this post, we introduce some commonly used commands for playing with Git.

Fast Commit

$ git commit -a -m "commit message"

Remember Credential on Windows

- configure for storing your credential

$ git config credential.helper store

$ git push http://example.com/repo.git

Username: <type your username>

Password: <type your password>

several days later

$ git push http://example.com/repo.git

[your credentials are used automatically]

Reset Last Commit

$ git commit -m "Something terribly misguided" # your last commit

$ git reset --soft HEAD^ # reset

It is extremely useful, when you encounter LARGE file problems

Skip Changes in Certain Files

$ git update-index --no-skip-worktree <file>

$ git add -p <file>

$ git update-index --skip-worktree <file>

Ignore Certain Files

If you want skip (ignore) certain type of files, the following configuration can be applied: Edit file “.gitignore”, and add the types you want to ignore, for example,

# ignore thumbnails created by windows

Thumbs.db

# Ignore files build by Visual Studio

*.user

*.aps

*.pch

*.vspscc

*_i.c

*_p.c

*.ncb

*.suo

*.bak

*.cache

*.ilk

*.log

[Bb]in

[Dd]ebug*/

*.sbr

obj/

[Rr]elease*/

_ReSharper*/

Push Local Branch to New Remote Repository

Suppose you have made a empty repository and named it myapp.git, you can:

$ git remote add <branch_name> <repository_url>

where <branch_name> can be any valid name you want. Then, you can push your local branch to the newly created remote repository by using

$ git push <branch_name> master

Resolve Large File Failure Problem

Suppose you git push your local repository to Github, however it failed because of some large-size files. And you might continue to experience git push failure, even if you have removed or ignore such large files. You can use the following commands to resolve such a problem by deleting those files in your history.

$ git filter-branch --index-filter 'git rm -r --cached --ignore-unmatch <file/dir>' HEAD

Of course, by using the above solution, you cannot upload your large file to Github. Recently, Github had released a tool to help you handle with large file. More details, you can refer to An open source Git extension for versioning large files

As a convenient editor for TeX file under Windows, WinEdit is quite popular. However, if you do not get your WinEdit registered (of course, it is not free), you will get annoyed by the popup window, which gets appeared frequently after you have used WinEdit without registration for certain time period. This post provides a hack way to deal with it.

Find Configuration File “Exit.edt”

options -> options interfaces … -> Advanced Configuration … -> Event Handlers -> Exit

the file looks like this:

// WinEdt Exit (Cleanup) Macro

PushTagsandRegisters;

// CloseAppl("YAP"); // Close YAP if running?

// CloseAppl("Complete"); // Close Complete Wizard if running?

// Remove Local ini and edt files if they are empty or the same as global

// Users probably forget to do this before upgrading

// so it is best to keep it tidy as we go...

Exe('%b\Config\Cleanup.edt');

PopTagsandRegisters;

End;

Add Following Sentence Just Before “End;”

RegDeleteValue('HKEY_CURRENT_USER', 'Software\WinEdt 9', 'Inst');

Not sure whether it works for WinEdit 10.

Close WinEdit and Reopen

Notice

Note that, we do not recommend you to use this solution. If you really cannot afford the registration, then Sublime Text together with LaTeXTools would be a good conditionally free substitute. Tis installation and configuration of Sublime Text with LaTeXTools can be found in Play LaTeX Projects with Sublime Text 3.

When uploading papers to EDAS (or other paper submission site), you might encounter a problem which is called as “Not All Fonts are Embedded”. Today, I’ll give you a relatively easy and convenient solution for Windows (for other OSes, solutions are similar).

Steps

Download and Install The Proper Ghostscript

Note that, in the following steps, I will assume the GS is installed at “C:/Program Files/gs”!

Change Configuration of WinEdit

-

Locate the settings of “ps2pdf”: Winedit -> Option -> Exectution Modes -> ps2pdf, as shown in the following figure,

-

Click “Browse for executable…” at the left-bottom, and select the “gswin32c.exe” at “C:/Program Files/gs/gs9.02/bin/”

-

Fill “Switches” entry with the following setting (if it was not empty before filling, then just replace the original one),

-dNOPAUSE -dBATCH -sDEVICE=pdfwrite -dPDFSETTINGS=/prepress -dCompatibilityLevel=1.4 -dSubsetFonts=true -dEmbedAllFonts=trueas shown in the following figure,

-

Replace the original setting in “Parameters” entry with the following one,

-sOutputFile="%N.pdf" -c save pop -f "%N.ps"as shown in the following figure,

-

Click “OK”

EPS figures are very important to scholars. However, making eps figures, especially high-quality ones is not an easy task. Today, we will introduce a simple method easily finish this tough task with visio.

Tools are needed

HOWTO

-

Use Visio to draw a figure (i.e., the figure to be transformed to eps)

-

Print this figure to PDF format in Visio by using Adobe Acrobat Printer. Note that, make sure your figure is located in a single page, and you are suggested to use high-quality printer.

-

Open the PDF generated in Step 2 by using Adobe Acrobat, and saveas “eps”. Note that, though you obtain the eps file already in this step, but usually this file could not satisfy your requirement. Therefore, you need still come to Step 4.

-

Open the EPS generated in Step 3 by using GSview, and then “File”/”PS to EPS”, check “Automatically calculate Bounding Box” if it isn’t, and then press “Yes”. Eventually, you get the desired EPS figure.

Notice

If your figure is very regular (with only certain regular-shape stuffs, for example a combination of rectangles/circles/lines.), Tikz might be a very good tool for producing eps figures. This repository provides some useful examples. If you want more, if can go to TeXample.net. In the future, we will provide some specific examples which we

encountered in some of our projects.

This post introduces how to build a simple MPI cluster with certain UBUNTU machines.

Build MPI Cluster

Here we have 4 nodes running UBUNTU server with these host names: ub0, ub1, ub2, ub3.

Configure hostnames

Edit _/etc/hosts_ like these:

127.0.0.1 localhost

192.168.109.103 ub0

192.168.109.104 ub1

192.168.109.106 ub2

192.168.109.107 ub3

Install NFS

NFS allows us to create a folder on the master node and have it synced on all the other nodes. This folder can be used to store programs. To install NFS just run the following command in the master node’s terminal:

$ sudo apt-get install nfs-server

To install the client program on other nodes, run this command on each of them:

$ sudo apt-get install nfs-client

Sharing Master Folder

Make a folder in all nodes, we’ll store our data and program in this folder.

$ sudo makedir /mirror

And then we share the contents of this folder on the master node to all the other nodes. In order to do this we first edit the /etc/exports file on the master node to contain the following line

/mirror *(rw,sync)

This can be done by using a text editor such as vim or by using the following command:

$ echo "/mirror *(rw,sync)" | sudo tee -a /etc/exports

Now restart the nfs service on the master to parse this configuration once again by using the following command:

$ sudo service nfs-kernel-server restart

Note, then we store our data and programs only in the master node and other nodes are able to access them with NFS.

Mounting /master in Nodes

Now all we need to do is to mount the folder on other nodes. This can be done by using:

$ sudo mount ub0:/mirror /mirror

But it’s better to change fstab in order to mount it on every boot. We do this by editing /etc/fstab and adding the following line:

ub0:/mirror /mirror nfs

and remounting all partitions by using the following on all the slave nodes:

$ sudo mount -a

Defining a User for Running MPI Programs

We define a user with same name and same userid in all nodes with a home directory in /mirror.

Here we name it “mpiu”! Also we change the owner of /mirror to mpiu:

$ sudo chown mpiu /mirror

Installing SSH Server

Run the following command in all the nodes in order to install openSSH server:

$ sudo apt-get install openssh-server

Setting up Passwordless SSH

First we login with our new user to the master node:

$ su - mpiu

Then we generate an RSA key pair for mpiu:

$ ssh-keygen -t rsa

You can keep the default ~/.ssh/id_rsa location. It is suggested to enter a strong passphrase for security reasons.

Next, we add this key to authorized keys:

$ cd .ssh

$ cat id_rsa.pub >> authorized_keys

As the home directory of mpiu in all nodes is the same (i.e., /mirror/mpiu), there is no need to run these commands on all nodes. If you didn’t mirror the home directory, though, you can use ssh-copy-id

To test SSH, you can run the following command on ub0:

$ ssh ub1 hostname

If you are asked to enter a passphrase every time, you need to set up a keychain. This is done easily by installing Keychain by using:

$ sudo apt-get install keychain

And to tell it where your keys are and to start an ssh-agent automatically edit your ~/.bashrc file to contain the following lines (where id_rsa is the name of your private key file):

if type keychain >/dev/null 2>/dev/null; then

keychain --nogui -q id_rsa

[ -f ~/.keychain/${HOMENAME}-sh ] && . ~/.keychain/${HOSTNAME}-sh

[ -f ~/.keychain/${HOSTNAME}-sh-gpg ] && . ~/.keychain/${HOSTNAME}-sh-gpg

fi

Exit and login once again or do a source ~/.bashrc for the change to take effect.

Now your hostname via ssh command should return the other node’s hostname without asking for a password or passphrase. Check that this works for all the slave nodes.

Install GCC

To be able to compile all the code on our master node (it’s sufficient to do it only there if we do inside the /mirror folder and all the libraries are in place on other machines) we need a compiler.

You can get gcc and other necessary stuff by install the build-essential package:

$ sudo apt-get install build-essential

Install Other Compilers

Other preferred compilers should be installed before installing MPICH.

In this step you may install other compilers such as SGI compiler …

Install MPICH2

Now the last ingredient we need installed on all the machines is the MPI implementation. You can install MPICH2 using:

$ sudo apt-get install mpich2

To test that the program did indeed install successfully, enter the following command on all the machines:

which mpiexec

which mpirun

Setting up a Machinefile

Create a file named “machinefile” in mpiu’s home directory with node names followed by a colon and a number of processes to spawn:

ub3:4 # this will spawn 4 processes on ub3

ub2:2

ub1 # this will spawn 1 process on ub1

ub0

Testing

Change directory to your mirror folder and write this MPI hellowworld program in a file mpi_hello.c:

#include<stdio.h>

#inlcude<mpi.h>

int main(int argc, char** argv){

int myrank, nprocs;

MPI_Init(&argc,&argv);

MPI_Comm_size(MPI_COMM_WORLD,&nprocs);

MPI_Comm_rank(MPI_COMM_WORLD,&myrank);

printf("Hello from processor %d of %d\n",myrank,nprocs);

MPI_Finalize();

return 0;

}

compile it:

$ mpicc mpi_hello.c -o mpi_hello

and run it:

mpiexec -n 8 -f machinefile ./mpi_hello

You should now see output similar to:

Hello from processor 0 of 8

Hello from processor 1 of 8

Hello from processor 2 of 8

Hello from processor 3 of 8

Hello from processor 4 of 8

Hello from processor 5 of 8

Hello from processor 6 of 8

Hello from processor 7 of 8

In this post, we will deal with the following XOR problem. Given $l$ consecutive nonnegative integers which start from $s$, i.e., $s, s+1, \cdots, s + l - 1$, you are asked to get the XOR of them, i.e., $XOR_{x\in [s+l-1]\setminus [s-1]}x$, where $[s]={1,2,\cdots,s}$. An algorithm with complexity $O(l\log (s + l))$ is straightforward: XOR these numbers one by one (see the following pseudo-code).

def XOR(s, l):

x = 0

for s in xrange(s, s + l):

x ^= s # note, for general case, you have to implement your own XOR for long-bit integers

return x

Logarithmic Solution

Now, let’s see a logarithmic solution, i.e., an algorithm with complexity $O(\log (s + l))$.

As we know, XOR of multiple single-bit variable (i.e., $0$ or $1$) equals $1$ if the number of $1$’s is odd, otherwise $0$. Now, we decompose the XOR of $[s+l-1]\setminus [s-1]$ by bits, for the $i^{th}$ bit, we only need count how many 1’s. It appears that we still need $O(l)$ time to do the counting. However, the consecutiveness of these numbers make the appearance of $1$’s and $0$’s periodic. For example, for the $3^{rd}$ bit (from the lowest bit), roughly speaking the pattern is as follows.

\[\cdots,0,0,0,0,1,1,1,1,0,0,0,0,1,1,1,1,\cdots\]Of course, you need pay a little attention to the beginning and ending parts, since $l$ might not divide $2^3$.

Generally,

- for the $k^{th}$ ($k>1$) bit you only need to count how many $1$’s are they in the first and last partial period (if applicable). Since there are always even number of $1$’s in a full period.

- for the first bit, clearly $1$’s appear $\lfloor\frac{l - b}{2}\rfloor + b$ times

The following pseudo-code shows how to XOR the $i^{th}$ bit. Clearly, it can be done in $O(\log(s + l))$, as there are at most $\log (s + l)$ bits, therefore, this algorithm can calculate the XOR in $O(\log^2(s+l))$ time. Note that, before calculating the XOR bit-by-bit, you should first get these bits and after finishing the XOR, you should assemble these bits into a decimal number. Clearly, these two operations can be done in $O(\log(s+l))$.

# base = 2 ** i

# bit is the value of the i-th bit

# r[i] is the i-th bit for the result

if base == 1:

r[i] = ((l - bit) / 2 + bit) % 2

else:

period = 2 * base

preamble_len = period - bit * base - s % base

preamble = min(l, preamble_len)

if bit == 0:

preamble = max(0, preamble - (base - s % base))

padding = 0

if l > preamble_len:

padding_len = (l - preamble_len) % period

padding = padding_len - base if padding_len > base else 0

r[i] = (preamble + padding) % 2

Fastpass is a Datacenter network framework proposed by Jonathan Perry, et al.. Its authors published its codes, however, I encountered some issues while installing it on Ubuntu 16.04. Here is Long’s vagrant VM for building Fastpass.

-

Issue 1: gcc version issue

-

error:

include/linux/compiler-gcc.h:86:30: fatal error: linux/compiler-gcc5.h: No such file or directory - cause: the kernel version of my Ubuntu (i.e., Ubuntu 16.04) is higher than the kernel version used by fastpass (i.e., v3.10.25).

-

solution: before

make -j22addingecho "#include <linux/compiler-gcc4.h>" > include/linux/compiler-gcc5.h - source: compile-a-linux-2-6-kernel-module-with-newer-compiler

-

-

Issue 2: Berkeley DB issue

-

error:

E: Unable to locate package libdb6.0-dev E: Couldn't find any package by glob 'libdb6.0-dev' E: Couldn't find any package by regex 'libdb6.0-dev' - cause: it seems there is no package

libdb6.0-devfor Ubuntu 16.04 (I only foundlibdb6.0-devfor Ubuntu 14.04) - solution: replace

libdb6.0-devwithlibdb-dev(if you still see the similar error, then you might need add the source to your/etc/apt/source/lsit)

-

As one of the most popular modern version control tools, git is getting more and more popular. As a result, Github becomes a necessary part of programmers’ daily life. In this post, we will introduce some tricks to help programmers play with Github. These tricks are extremely useful, when you are dealing with multiple Github accounts (e.g., one for company codes, one for personal codes).

Avoid Password with SSH: Single Account Case

The first trick is to avoid entering password while pushing local repositories to remote or pulling/fetching private remote repositories.

Generating SSH Key

$ ssh-keygen -t rsa -b 4096

Generally, we will see outputs as follows.

Generating public/private rsa key pair.

Enter file in which to save the key (/home/mininet/.ssh/id_rsa): git_key_example

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in git_key_example.

Your public key has been saved in git_key_example.pub.

The key fingerprint is:

38:44:30:a8:a4:6f:eb:c1:66:12:bb:28:dd:bd:7c:29 mininet@mininet-vm

The key's randomart image is:

+--[ RSA 4096]----+

| .o.. |

| .. o |

|o. . |

|o . . |

|.. o S |

| +o . |

|oo=o . . |

|o=o...E o |

|+.. o+ |

+-----------------+

During the above process, you will be asked to

- enter the file name for your key pair (In the above example, we used

git_key_exampleas the file name.). If you only enter the file name, the key pair would be stored in current directory. If you want to store your key in somewhere else, you can add the full path of your file. - Enter a passphrase (If you use SSH out of security, then we strongly suggest you enter one. However, if you choose SSH just because you do not want to enter the password, then you are suggested to leave it empty. Because otherwise you will be asked to enter the same passphrase whenever you

git push.).

After the above process, you will find the key pair in the directory you enter. In the above example (assume current directory is /home/mininet/.ssh/), you will find the following two new files:

- git_key_example

- git_key_example.pub The first one is the private key and the second is the public key.

Upload to Github

-

Prepare (get the content of public key):

$ cat git_key_example.pub ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAACAQDEKiWm5RBjQJOsNmGi6GQNAqhoovXA/qVm1i+nyZ0j18kQkCaeTSdGDUs9UiV9ts7vG4MLHucRLI4dh5hvOH3gr0W7MhB8JubOptKJWtjGaw4f1Vb3xxdKGJ7igHA/ud2N5omkJIptyKXrvybLkqnkLcGgxttvqheOGczzC2g0MIa7MxqhlALkdj0QVyjrtbqltB+k0+lAqyeLRJGrWqmRZ8FlPn1KslpWKq+LFAuKNg24YF7HxbGubdVVeGsKTOowrDBN5IGdEJvlqwZsVUvGnx1oznJzpfdw80KCcm1vFmMU6E3oo3OLfgjhRvcfl5DV+OznTPPr3KbybNSAUIr5lR00LkBhKH0nxiHcERi9/E6/95/fVovY8vV38wPLXBCq/jkmQmtUFHQ86C/1f0bgAAano56Ovd2p7ur5x2pe+sCUxwcQQ3kYOpesqQhCvpkIA6kDGznsGiY1418sCj8D7na1MoifvzsPfpAbO8zAO0YtITEfaaiBum9i4GbzMTl4AzuLIff03XhPRHlew/9McG6DlKm04A96QmRbUZQHzdUyivOSpG7HCFBrYmiEga1EPV8WRRU8SJu0hftV0Upk2T46KASygvo5A0wZ6YUWytHhY8Sdtrx56y4mOtol3RkQtR/vUGCjxIbtl3Lw1J3xoxQkxynesUM8bopBgkwxiQ== mininet@mininet-vm -

Open the browser and go to

github.com, thenLog in

Find “SSH and GPG keys” in “Personal settings”

“New SSH key”

Paste the content of your public key.

Add Your SSH Key to ssh-agent

$ eval "$(ssh-agent -s)"

$ ssh-add /path/to/your_ssh_key

To avoid to enter the above commands every time after you restart your computer, you can add them in your bash configuration file (e.g., ~/.bashrc for Ubuntu, ~/.bash_profile for OS X).

Multiple Accounts Case

Assume you have two github account, for ease of presentation, we refer to them as A and B.

- Configure SSH keys for A and B, respectively by the above approach

-

Edit

~/.ssh/configand add the following sentences at the end of the file:Host github_A HostName github.com User git IdentityFile path/to/ssh-private-key-for-A Host github_B HostName github.com User git IdentityFile path/to/ssh-private-key-for-B -

Change

github.comto the corresponding host name you configured above. Suppose the remote repository associated with account A isgit@github.com:A/example.git:$ git remote add remote_A git@github_A:xlong88/publications.git $ ... $ git push remote_A masterSimilar for B.

Recently, I have learned about the move semantics of C++ 11 from Bo Qian’s YouTuBe Channel. As an “old boy” that is curious to all kinds of new techniques, I definitely would give myself a try.

Define a Class

First of all, I create the following C++ class (which is similar to the one used in Bo Qian’s Video), i.e.,, Note that, all “#include” are omitted.

class exampleA {

int *x;

int size;

public:

// default constructor

exampleA(): x(NULL), size(0) {

std::cout << "Default constructor is called" << std::endl;

}

// constructor from a std::vector

exampleA(const std::vector<int>& vec){

size = vec.size();

x = new int[size];

for (int i = 0;i < size;++ i) x[i] = vec[i];

std::cout << "Create exampleA object from vector" << std::endl;

}

// copy constructor

exampleA(const exampleA& other)

{

std::cout << "Copy constructor is called" << std::endl;

size = other.size;

if (size > 0) {

x = new int[size];

for (int i = 0;i < size;++ i) x[i] = other.x[i];

}else

{

x = NULL;

}

}

// move constructor

exampleA(exampleA&& other)

{

std::cout << "Move constructor is called" << std::endl;

size = other.size;

x = other.x;

other.size = 0;

other.x = NULL;

}

// deconstructor

~exampleA() {

if (x != NULL) delete [] x;

x = NULL;

size = 0;

std::cout << "Deconstructor is called" << std::endl;

}

// friend function: overloading operator <<

friend std::ostream& operator<<(std::ostream& os, const exampleA& a);

};

// definition of (or implementation of) overloading operator <<

std::ostream& operator<<(std::ostream& os, const exampleA& a){

for (int i = 0;i < a.size;++ i) os << a.x[i] << " ";

return os;

}

Define two Functions

Then, I defined a function that returns an exampleA object and another function that uses an object from the class exampleA as a parameter as follows.

// function to create an exampleA object

exampleA createObject(){

exampleA a(std::vector<int>(10, 1));

return a;

}

// function uses an exampleA object as a parameter

void passByValue(exampleA a) {

std::cout << a;

}

“Moment to Witness the Miracle”

Next, I though the moment to witness the miracle (Chinese: 见证奇迹的时刻) was coming. I created the following use cases to verify my understanding.

int main()

{

exampleA a(std::vector<int>(10, 1));

passByValue(a);

std::cout << "======================================\n\n";

passByValue(createObject());

return 0;

}

Before “witnessing the miracle”, let us first do some simple analysis to figure out what the “miracle” is. According to the above use cases, we first create an exampleA object – i.e., a – from an std::vector. Then we passed a to the function passbyValue by value, since a is a lvalue, we would expect the copy constructor to be called. And then, we passed a rvalue createObject()

to the function passbyValue, we would expect the move constructor to be called.

However, the following presents the output from running the above example (by using g++ -std=c++11 example.cpp -o example && ./example)

Create exampleA object from vector

Copy constructor is called

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

======================================

Create exampleA object from vector

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

Deconstructor is called

Unfortunately, we failed to witness the most important part of the miracle, i.e., the move semantics.

Dig Out of The Chief Culprit

After Google, Google, … and Google again, I eventually found “the chief culprit”. It is the copy elision feature of the compiler. Now, it is really the moment to witness the miracle. The following gives the final outputs after disabling the copy elision (add a flag -fno-elide-constructors, that is to run g++ -fno-elide-constructors -std=c++11 example.cpp -o example && ./example).

Create exampleA object from vector

Copy constructor is called

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

======================================

Create exampleA object from vector

Move constructor is called

Deconstructor is called

Move constructor is called

1 1 1 1 1 1 1 1 1 1

Deconstructor is called

Deconstructor is called

Deconstructor is called

From the above outputs, you can see that the move constructor was called twice. First call was during the returning from the function createObject, and the other one is during passing value to the function passByValue.

In this post, we investigate how to efficiently delete certain elements from a Python list. More specifically, you want to delete certain elements, whose indices are given.

Solution A: Move to A New List

def removeA(myList, removalIndices):

if not isinstance(removalIndices, set):

removalIndices = set(removalIndices)

return [v for i, v in enumerate(myList) if not (i in removalIndices)]

This solution moves elements that do not need remove to a new list. Clearly, it would not be quite efficient when the number of removals is much less than the length of the original list. Besides, if the indices of the removals are not stored in a set but a list, the conversion from list to set would further drop the efficiency.

Solution B: Remove From The Last

def removeB(myList, removalIndices):

for removalIndex in removalIndices[::-1]:

del myList[removalIndex]

return myList

This solution removes the elements from the last (the one with the largest index) to the first (the one with the smallest index). This solution assumes that the indices of removals are sorted in an ascending order. Note that, removing from the first one is not a good idea, since each removal will change the indices of the remaining removals. In other words, for removals other than the first one, you have to recalculate the index before removing.

Solution C: Shift Removals Together Then Remove

def removeC(myList, removalIndices):

nextRemovalIndex = removalIndices[0]

indexOfRemovalIndices = 1

numOfRemovals = len(removalIndices)

lenOfList = len(myList)

currentIndex = nextRemovalIndex + 1

while currentIndex < lenOfList:

nextIndex = removalIndices[indexOfRemovalIndices] if indexOfRemovalIndices < numOfRemovals else lenOfList

while currentIndex < nextIndex:

myList[nextRemovalIndex] = myList[currentIndex]

nextRemovalIndex += 1

currentIndex += 1

indexOfRemovalIndices += 1

currentIndex += 1

# pay attention

myList[-numOfRemovals:] = []

return myList

This solution first shifts all the removals to the end of the list, and then removes them. Note that, this solution also assumes the indices of removals are sorted in an ascending order. Compare to Solution B, it guarantees that every element that needs move just moves once.

Which One Will Be More Efficient?

- Dense Cases (

myListhas $10^6$ elements, removing $80\%$)-

Random locations

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 159.12msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 134.69msSolution B: 1.13ms

Solution C: 132.39ms

Remarks: during the measurements, I found that the

setcreate from different status (e.g., sorted or unsorted) of alistwill have different efficiency. I will investigate this in details in future. -

From the beginning

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 187.58msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 133.30sSolution B: 7403.70ms

Solution C: 221.80ms

-

From the ending

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 133.34msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 142.03sSolution B: 0.74ms

Solution C: 121.12ms

-

- Sparse Cases (

myListhas $10^6$ elements, removing $0.2\%$)-

Random locations

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 134.81msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 149.77msSolution B: 216.96ms

Solution C: 159.68ms

-

From the beginning

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 150.39msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 153.45msSolution B: 2098.12ms

Solution C: 182.74ms

-

From the ending

Solution A (with

listrepresenting removals, i.e.,removalIndicesis alist): 131.15msSolution A (with

setrepresenting removals, i.e.,removalIndicesis aset): 134.47sSolution B: 204.69ms

Solution C: 163.19ms

-

TODO

- analyze the above results

- efficiency of set creating from different status of a list

- while loop versus splicing

TiKz provides a very efficient way for us to “program” our figures. However, sometimes you might only want to share your figures but not the source codes of your figures with other for some reasons (e.g., the source code uses a large amount of TiKzlibrary, other people might not have these libraries in the machine or you just want to make your codes private). In this post, we present a way to generate eps figure from TikZ source code (Of course, you can also generate a PDF and let others include that PDF).

LaTeX Code

\documentclass{article}

\usepackage{tikz}

%% put tikzlibrary below if necessary

% set up externalization

\usetikzlibrary{external}

\tikzset{external/system call={latex \tikzexternalcheckshellescape -halt-on-error

-interaction=batchmode -jobname "\image" "\texsource";

dvips -o "\image".ps "\image".dvi;

ps2eps "\image.ps"}}

\tikzexternalize

\begin{document}

%% put your tikz code below or input your tikz code via \input

\end{document}

Usage

latex -shell-escape LATEX_FILE_NAME

Please make sure you are using latex engine, other engines

will not work. Besides, please make sure all required tools

are installed.

SSH is a commonly used remote-access solution. The popularity of various cloud based solutions brings “some challenges” in the usage of SSH to remotely access the servers in the could. Because, you might have a lot of VMs in the cloud, and they might have different IP address/URL, username and passport. This would become a nightmare if you only know the “elementary” SSH commands.

In this post, we will introduce you some “advanced” SSH configurations. For ease of presentation, we assume you have two VMs in the cloud, their identities are as follows:

- A

- domain name: hostA.com

- username: userA

- password: passwdA

- B

- domain name: hostB.com

- username: userB

- password: passwdB

Note that, unless explicitly stated, we assume all the configurations are on your local computer.

Generate SSH key

Please refer to Play with Github

Upload SSH Public Key to VM A/B

Copy the content of your public key and add it to the end of the file ~/.ssh/authorized_keys on VM A (note that if the file does not exists, you need create one.).

Similarly, upload SSH public key to VM B.

Configure Local SSH

Add the following content at the end of your ssh configuration file, i.e., ~/.ssh/config

Host A

HostName hostA.com

User userA

IdentityFile path/to/ssh-private-key

Host B

HostName hostB.com

User userB

IdentityFile path/to/ssh-private-key

Now, you can remotely access VM A simply by ssh A.

More Advanced Tricks To Be Appeared

- Showing GUI

- Showing GUI from a VM behind NAT

- Creating SSH tunnels to “bypass” access gateway

This post details the steps for building and using a “LaTeX IDE” with Sublime Tex 2/3 and LaTeXTools. For ease of presentation, we will call sublime text 3 as ST3 for short, similarly we will use ST2 stands for sublime text 2.

Download and Installation

- Download ST3 for your operating system.

- Install

- Window/Mac: GUI install, just doubly check the file you downloaded, and follow the instructions.

- Ubuntu: here assume you have downloaded the deb installation file, open a terminal and type in the following command

sudo dpkg -i path/to/sublime_text_3_deb_file

Configuration

-

Manually (Semi-manually) install Package Control (Note that, Package Control is the only package that you need install manually. It seems that the latest version of ST3, you can also install this package like other packages.)

ctrl + `(command + `if you use Mac) to open the console, and copy the corresponding codes into the console and then pressenter.

-

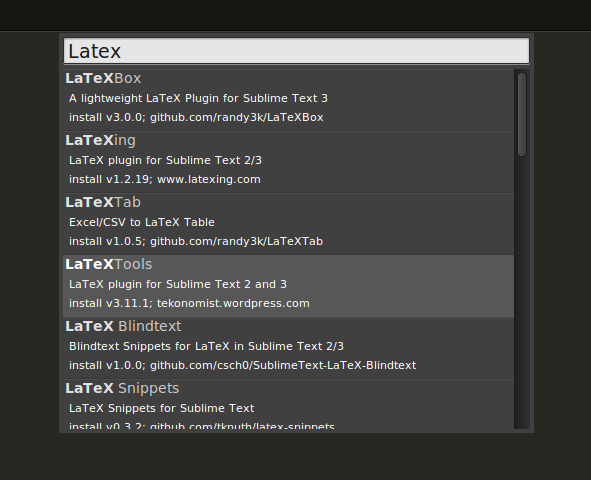

Install package LatexTools

ctrl + shift + p(command + shift + pif you use Mac) to open the command Platte for Package Control- Find and click “Package Control: Install Package”

- Enter “LaTexTools” to search and click on the “LaTexTools” to install

-

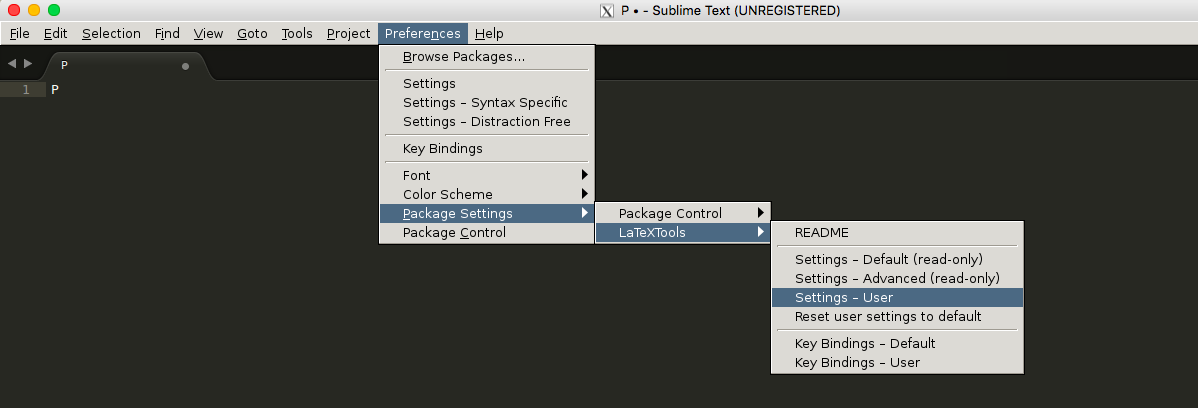

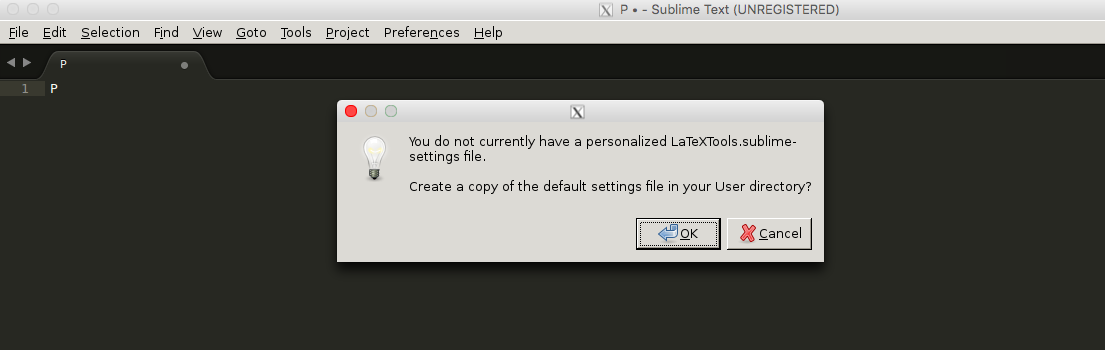

Configuration (optional only if you want to use the customized build script)

- Open the configuration for LaTeXTools and “OK” for all pop-up windows.

- Replace the content with this one

- Open the configuration for LaTeXTools and “OK” for all pop-up windows.

Remark: To use the above customized configuration, you need the following tools (note that, for the latest version of Ubuntu, all tools are built-in in the OS.)

- Ghostscript

- Windows

- Mac:

brew install ghostscript

- PDF Viewer

- Windows: SumatraPDF

- Mac: Skim

Usage

Please refer to LateXTools for Cross Reference in Multi-file LaTeX Projects

In this post, we will introduce a method to help you easily do cross reference in a multi-file latex project (i.e., a latex project where latex source files are distributed into multiple files). For example, the file structure of a multi-file latex project might look like:

path/to/your-latex-project/

main.tex

introduction.tex

related-work.tex

my-work.tex

evaluation.tex

conclusion.tex

reference.bib

figures/

In the above project, you might cross cite for example an algorithm which you define in the file my-work.tex in the file evaluation.tex. Without the assistance of some useful tools, you might have to check the file my-work.tex to make sure which label you have defined for that algorithm. And in some tools, they might automatically remind you all the labels you have defined in the current file, which means they cannot help you in the cross-file cross reference.

In this post, we will describe you an easy method to help you do the cross-file cross reference by using the LaTeXTools plug-in of Sublime Text. Note that in this post, we will focus on the configuration for cross-file cross reference. If you have no idea on how to install the LaTex Tools or even Sublime Text, please ask Google or some other search engine.

Create a Sublime Text Project

Create a sublime text project for your multi-file latex project. If you have no idea on how to create a project in sublime text 2/3, please refer to understanding-projects-in-sublime-text-saving-switching-etc.

Configure Your Project

After you create your project, you will see a auto-created file (named as your-project-name.sublime-project) in your root path of your multi-file latex project. Open the file, and the put the following configurations into the file.

"settings" : {

"TEXroot": "main.tex",

"tex_file_exts": [".tex"]

}

The above configuration assumes, the root latex file of your project is main.tex (if your project use another tex file, please change it correspondingly.). If you really want to make a full understanding of the above configurations, please refer to Project-Specific Settings of the Latex Tools.

The following is for those who are not so familiar with JSON, i.e., the format for the project setting file. Usually, before you do manual changes on the project setting file, its content looks as follows:

{

"folders":

[

{

"path": "."

}

]

}

After you add the configurations, your setting file would look as follows (please pay attention to the comma after the ], it cannot be omitted):

{

"folders":

[

{

"path": "."

}

],

"settings" : {

"TEXroot": "main.tex",

"tex_file_exts": [".tex"]

}

}

Please pay attention to the comma added after the close square bracket (i.e., ]).

Notice

If you have any suggestions or questions regarding this post, please lease your message in the comment session or send e-mail to mr.gonglong@outlook.com directly.

Running time measurement is very important when you want to know the empirical time complexity of your “algorithm” or when you want to provide some progress status of your running program (for example, how long will

your program continue before finish a specific task). In MATLAB, you can use tik tok; in Python, you can use timeit or time package; What about in C++. In this post, we will give a few macros for you to conduct the time

measurement task.

Run Time Measurement Macros for C++

#include <chrono>

#define TIMING

#ifdef TIMING

#define INIT_TIMER auto start = std::chrono::high_resolution_clock::now();

#define START_TIMER start = std::chrono::high_resolution_clock::now();

#define STOP_TIMER(name) std::cout << "RUNTIME of " << name << ": " << \

std::chrono::duration_cast<std::chrono::milliseconds>( \

std::chrono::high_resolution_clock::now()-start \

).count() << " ms " << std::endl;

#else

#define INIT_TIMER

#define START_TIMER

#define STOP_TIMER(name)

#endif

Please make sure that your compiler support C++11, and you do use C++11 or higher to compile your code. For example, if you want to compile your C++ code with C++11 by using g++, you can simply add the following flag: -std=c++11

An Example

The following presents a simple example, which uses the above macros to measure running time (it assumes that the above macros are defined in tm.h).

#include <iostream>

#include "tm.h"

inline int add(int a, int b) {

return a + b;

}

int main()

{

// initialize a timer

INIT_TIMER

int repeat_times = 10000000;

// start the time

START_TIMER

for (int i = 0;i < repeat_times;++ i) {

add(100, 10);

}

// stop the timer and printing out the time information

STOP_TIMER("adding two integers for repeating " + std::to_string(repeat_times) + " times")

return 0;

}

If you run the above code correctly, you should see an output similar like below:

$ RUNTIME of adding two integers for repeating 10000000 times: 19 ms

Git is becoming one of the most popular version control tools. In this post, we introduce some commonly used commands for playing with Git.

Fast Commit

$ git commit -a -m "commit message"

Remember Credential on Windows

- configure for storing your credential

$ git config credential.helper store

$ git push http://example.com/repo.git

Username: <type your username>

Password: <type your password>

several days later

$ git push http://example.com/repo.git

[your credentials are used automatically]

Reset Last Commit

$ git commit -m "Something terribly misguided" # your last commit

$ git reset --soft HEAD^ # reset

It is extremely useful, when you encounter LARGE file problems

Skip Changes in Certain Files

$ git update-index --no-skip-worktree <file>

$ git add -p <file>

$ git update-index --skip-worktree <file>

Ignore Certain Files

If you want skip (ignore) certain type of files, the following configuration can be applied: Edit file “.gitignore”, and add the types you want to ignore, for example,

# ignore thumbnails created by windows

Thumbs.db

# Ignore files build by Visual Studio

*.user

*.aps

*.pch

*.vspscc

*_i.c

*_p.c

*.ncb

*.suo

*.bak

*.cache

*.ilk

*.log

[Bb]in

[Dd]ebug*/

*.sbr

obj/

[Rr]elease*/

_ReSharper*/

Push Local Branch to New Remote Repository

Suppose you have made a empty repository and named it myapp.git, you can:

$ git remote add <branch_name> <repository_url>

where <branch_name> can be any valid name you want. Then, you can push your local branch to the newly created remote repository by using

$ git push <branch_name> master

Resolve Large File Failure Problem

Suppose you git push your local repository to Github, however it failed because of some large-size files. And you might continue to experience git push failure, even if you have removed or ignore such large files. You can use the following commands to resolve such a problem by deleting those files in your history.

$ git filter-branch --index-filter 'git rm -r --cached --ignore-unmatch <file/dir>' HEAD

Of course, by using the above solution, you cannot upload your large file to Github. Recently, Github had released a tool to help you handle with large file. More details, you can refer to An open source Git extension for versioning large files

As a convenient editor for TeX file under Windows, WinEdit is quite popular. However, if you do not get your WinEdit registered (of course, it is not free), you will get annoyed by the popup window, which gets appeared frequently after you have used WinEdit without registration for certain time period. This post provides a hack way to deal with it.

Find Configuration File “Exit.edt”

options -> options interfaces … -> Advanced Configuration … -> Event Handlers -> Exit

the file looks like this:

// WinEdt Exit (Cleanup) Macro

PushTagsandRegisters;

// CloseAppl("YAP"); // Close YAP if running?